Machine learning (ML) and artificial intelligence (AI) technologies, often collectively referred to simply as AI, are among the most heavily invested areas of technology today. AI capabilities are expected to be integrated into a large number of edge devices and autonomous systems over the next few years, while cloud-based and generative AI services continue to grow.

However, the AI boom has not been entirely smooth sailing. In many ways, deep neural network (DNN) technologies, including large language models (LLMs), natural language processing, speech recognition, and reinforcement learning systems, rely on large amounts of storage, memory, and data processing as a shortcut to building effective AI.

The premise is that larger training sets and more computational resources can produce more accurate and useful models faster than earlier approaches to ML/AI model development, which relied on mathematical efficiency and optimization. According to an analysis published by OpenAI, the amount of compute used in the largest AI training runs doubles every 3.4 months, whereas the computing power delivered by Moore's Law doubles only about every two years. At some point, therefore, growth in computing power will no longer keep up with the needs of the current AI training and inference paradigm.
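To put the two doubling periods side by side, the short Python sketch below compounds each rate over a few time horizons. It only restates the 3.4-month and two-year figures quoted above; the function name `growth_factor` is illustrative. Over two years, for example, a 3.4-month doubling time compounds to roughly a 133x increase, against 2x for Moore's Law.

```python
def growth_factor(months: float, doubling_period_months: float) -> float:
    """Multiplicative growth after `months` for a quantity that doubles every `doubling_period_months`."""
    return 2.0 ** (months / doubling_period_months)


AI_COMPUTE_DOUBLING_MONTHS = 3.4  # figure quoted from the OpenAI analysis
MOORE_DOUBLING_MONTHS = 24.0      # "double every two years"

for years in (1, 2, 5):
    months = 12 * years
    demand = growth_factor(months, AI_COMPUTE_DOUBLING_MONTHS)
    supply = growth_factor(months, MOORE_DOUBLING_MONTHS)
    print(f"{years} yr: AI compute x{demand:,.0f} vs Moore's Law x{supply:.1f} (gap x{demand / supply:,.0f})")
```

Because both quantities grow exponentially, the gap itself grows exponentially, which is why the article expects demand to eventually outrun supply.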

To remain competitive and bring AI to the edge, this approach requires trade-offs to meet the size, weight, cost, and power requirements of edge systems. One such trade-off is lowering the resolution (numerical precision) of the data used in digital AI models. However, reducing resolution has practical limits of its own in how much energy and complexity it can save. GPUs have become a popular choice for the large matrix operations required by AI training and inference, because they perform large matrix calculations faster and more energy-efficiently than CPUs.
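As a rough illustration of lowering resolution, here is a minimal sketch of symmetric uniform quantization, one common way to shrink model weights. The article does not say which scheme is meant, so the scheme, the random weights, and the helper names `quantize_symmetric` and `dequantize` are assumptions. Each halving of bit width halves memory, but the worst-case rounding error (bounded by half the quantization step) grows quickly, which is the kind of practical limit described above.

```python
import random


def quantize_symmetric(values, bits=8):
    """Symmetric uniform quantization: one shared scale, integers in [-qmax, qmax]."""
    qmax = 2 ** (bits - 1) - 1
    max_abs = max(abs(v) for v in values) or 1.0  # guard against an all-zero tensor
    scale = max_abs / qmax
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Map the integers back to approximate floating-point values."""
    return [x * scale for x in q]


random.seed(0)
weights = [random.gauss(0.0, 0.1) for _ in range(1000)]  # hypothetical FP32 weights

for bits in (8, 4, 2):
    q, scale = quantize_symmetric(weights, bits)
    restored = dequantize(q, scale)
    max_err = max(abs(w - r) for w, r in zip(weights, restored))
    print(f"{bits}-bit: {32 // bits}x less memory than FP32, max error {max_err:.4f} (step {scale:.4f})")
```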

However, because these digital systems are built on the von Neumann architecture, their processing speed is inevitably limited in practice. This phenomenon, known as the von Neumann bottleneck, arises because processing speed is limited by the rate at which data can be transferred between memory and the processing unit.

Figure 1: How the traditional von Neumann architecture becomes a bottleneck due to the large amount of data movement.
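A back-of-the-envelope, roofline-style estimate shows why data movement rather than arithmetic dominates a matrix-vector multiply, the core operation of DNN inference. The accelerator figures (100 TFLOP/s, 1 TB/s), the FP16 weight size, and the matrix dimensions are hypothetical round numbers chosen for illustration, not measurements of any real product. Because every weight is fetched once and used for only one multiply-add, the estimated memory time comes out about 100 times longer than the compute time under these assumptions.

```python
def mvm_time_bounds(rows, cols, bytes_per_weight, peak_flops, mem_bandwidth):
    """Lower-bound runtimes for y = W @ x when each weight is read from memory once."""
    flops = 2 * rows * cols                       # one multiply and one add per weight
    bytes_moved = rows * cols * bytes_per_weight  # weight traffic; x and y are ignored as small
    compute_time = flops / peak_flops             # seconds if arithmetic were the only limit
    memory_time = bytes_moved / mem_bandwidth     # seconds if data movement were the only limit
    return compute_time, memory_time


# Hypothetical accelerator: 100 TFLOP/s peak compute, 1 TB/s memory bandwidth, FP16 weights.
compute_s, memory_s = mvm_time_bounds(4096, 4096, 2, 100e12, 1e12)
print(f"compute-limited time: {compute_s * 1e6:.2f} us")
print(f"memory-limited time:  {memory_s * 1e6:.2f} us")
print(f"data movement takes {memory_s / compute_s:.0f}x longer than the arithmetic")
```

Faster arithmetic units would not help here; only reducing the bytes moved per operation would, which is the motivation for computing where the data is stored.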

The debut of analog AI

This is why IBM and other AI technology companies, such as Mythic AI, are betting on analog AI as the future basis for edge AI training and inference. According to industry insiders, analog AI may be tens to hundreds of times faster and more energy-efficient than digital AI, which would greatly improve the AI processing power of energy-limited edge devices.

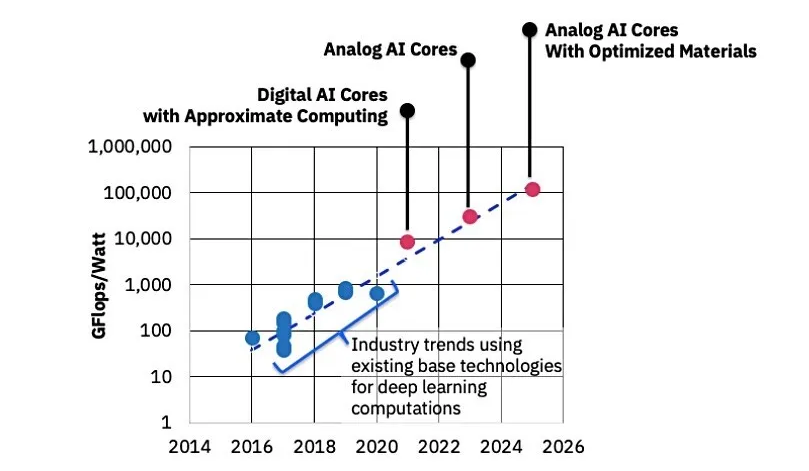

Figure 2: This chart compares current and future digital AI and analog AI hardware technologies and their performance per watt, from 2014 to 2026.

Digital processing represents data as discrete values, with each bit stored in a designated transistor or memory cell, whereas analog processing can store continuous values in a single transistor or memory cell. This feature alone allows analog techniques to store more data in less space, but at the cost of precision: the stored values are subject to variability in the form of random errors.

The variability of analog storage can cause mismatch errors in forward propagation (inference) as well as errors in backpropagation (training). Neither is desirable, but such errors can be kept to a minimum by combining digital and analog circuitry, and additional AI training techniques can be used to reduce backpropagation errors.

For artificial neural networks (ANNs), this variability can now be managed. Historically, the variability of analog storage is the reason digital computing replaced analog computing more than half a century ago, as most computing systems required greater accuracy.
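To get a feel for forward-propagation mismatch, the sketch below stores a small random weight matrix with noise on every weight and compares the noisy matrix-vector product against the exact one. The multiplicative Gaussian noise model, the matrix size, and the noise levels are assumptions chosen for illustration; real device variability depends on the memory technology and the circuits around it.

```python
import random


def matvec(W, x):
    """Plain matrix-vector product y = W x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def perturb(W, rel_sigma, rng):
    """Assumed variability model: each stored weight gets independent multiplicative Gaussian noise."""
    return [[w * (1.0 + rng.gauss(0.0, rel_sigma)) for w in row] for row in W]


def relative_error(y_ref, y):
    """Euclidean norm of the output error divided by the norm of the exact output."""
    num = sum((a - b) ** 2 for a, b in zip(y_ref, y)) ** 0.5
    den = sum(a ** 2 for a in y_ref) ** 0.5
    return num / den


rng = random.Random(42)
W = [[rng.uniform(-1.0, 1.0) for _ in range(64)] for _ in range(32)]  # hypothetical 32x64 layer
x = [rng.uniform(0.0, 1.0) for _ in range(64)]                           # hypothetical input
y_ref = matvec(W, x)

for rel_sigma in (0.01, 0.05, 0.10):
    errs = [relative_error(y_ref, matvec(perturb(W, rel_sigma, rng), x)) for _ in range(20)]
    print(f"{rel_sigma:.0%} weight noise -> mean output error {sum(errs) / len(errs):.2%}")
```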

The advantages of analog AI

Another advantage of analog computing for ANNs lies in the multiply-accumulate (MAC) operation, the most common operation in ANN computation, which can be carried out directly by the physics of electrical circuits: Ohm's law performs the multiplication (the current through a conductance G at voltage V is I = G × V) and Kirchhoff's current law performs the accumulation (currents flowing into a shared wire add up). In this way, an analog processor can apply its inputs to an array of memory cells and perform a full matrix operation in parallel, which is much faster and more efficient than performing the same calculation on a CPU or even a GPU.
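The multiply-accumulate physics can be simulated for an idealized crossbar: conductances stand in for weights, row voltages for inputs, and each column current is the accumulated result. This is a minimal sketch assuming ideal devices (no wire resistance, noise, or analog-to-digital conversion); the function name `crossbar_mvm` and the conductance and voltage values are illustrative.

```python
def crossbar_mvm(G, V):
    """
    Idealized resistive crossbar.
    G[i][j] is the conductance (siemens) of the cell at row i, column j;
    V[i] is the voltage (volts) applied to row i.
    Ohm's law gives each cell's current G[i][j] * V[i] (the multiply);
    Kirchhoff's current law sums the currents entering each column wire (the accumulate).
    Returns the column currents in amperes.
    """
    n_rows, n_cols = len(G), len(G[0])
    return [sum(G[i][j] * V[i] for i in range(n_rows)) for j in range(n_cols)]


G = [[1e-6, 2e-6],
     [3e-6, 4e-6],
     [5e-6, 6e-6]]   # 3 rows x 2 columns, microsiemens-scale conductances (illustrative)
V = [0.1, 0.2, 0.3]  # read voltages applied to the three rows

I = crossbar_mvm(G, V)
print(I)  # approximately [2.2e-06, 2.8e-06]
```

The Python loop runs sequentially, whereas in hardware every column current settles at the same time, which is where the parallelism described above comes from.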

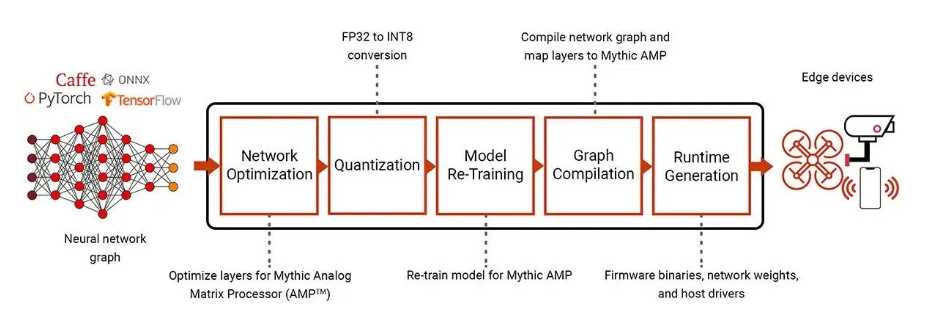

Figure 3: Deploying AI workflows built around standard frameworks such as PyTorch, Caffe, and TensorFlow on an analog matrix processor.

Another advantage of analog computing is that it can take advantage of different memory cell technologies, such as phase-change memory (PCM) and flash memory cells operated as variable resistors rather than as on/off switches. These analog storage methods allow computation and storage to take place in the same location, and they retain data without a continuous supply of power.

As a result, analog in-memory computing avoids the von Neumann bottleneck, and because analog data storage is inherently passive, it consumes far less energy over time than active digital storage. PCM, for example, works on the principle that a material's conductivity depends on the ratio of amorphous to crystalline material within the PCM cell.

In IBM's PCM technology, a lower programming current produces a more crystalline structure with lower resistance, while a higher programming current produces amorphous material with higher resistance. Because both states persist without power, PCM storage is relatively non-volatile, and an ANN synaptic weight can be stored as a continuous value anywhere between the upper and lower conductance limits of a single PCM cell, rather than as bits spread across multiple transistors or other digital storage units.
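As a minimal sketch of storing a weight between a cell's conductance limits, the code below linearly maps weights in a symmetric range onto a single cell's conductance window and reads them back. The limits `G_MIN` and `G_MAX` are hypothetical values, not IBM device specifications, and programming noise and drift are ignored. Many published designs instead store a signed weight on a pair of cells and use their difference; that variant is not shown here.

```python
G_MIN = 0.1e-6  # hypothetical lower conductance limit (siemens): mostly amorphous, high resistance
G_MAX = 25e-6   # hypothetical upper conductance limit (siemens): mostly crystalline, low resistance


def weight_to_conductance(w, w_max):
    """Linearly map a weight in [-w_max, w_max] onto one cell's window [G_MIN, G_MAX]."""
    w = max(-w_max, min(w_max, w))  # weights outside the representable range are clipped
    return G_MIN + (w + w_max) / (2 * w_max) * (G_MAX - G_MIN)


def conductance_to_weight(g, w_max):
    """Invert the mapping when the cell's conductance is read back."""
    return (g - G_MIN) / (G_MAX - G_MIN) * 2 * w_max - w_max


for w in (-1.0, -0.25, 0.0, 0.6, 1.0):
    g = weight_to_conductance(w, w_max=1.0)
    print(f"w = {w:+.2f} -> G = {g * 1e6:6.3f} uS -> read back {conductance_to_weight(g, 1.0):+.3f}")
```

A linear mapping keeps the read-back arithmetic trivial; in a real array, the achievable precision would be set by how finely and reliably the cell can be programmed within that window.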

Therefore, the emergence of analog AI is not surprising for edge inference and AI training on energy- and processing-constrained systems, as it can deliver the benefits of DNNs without access to extensive cloud AI infrastructure or Internet connectivity. This will lead to more responsive, efficient, and capable edge AI that is better suited to autonomous applications such as robotics, fully autonomous driving, workplace safety, and even cognitive radio and communications.

About Us

Heisener Electronic is a well-known international one-stop purchasing service provider for electronic components. Built on the principles of customer orientation and innovation, and backed by a sound process-control system, a professional management team, and advanced inventory-management technology, Heisener provides one-stop electronic-component support services and aims to be the preferred partner for enterprises and research institutions.